Understanding Large Language Models (LLMs)

Introduction

As artificial intelligence continues to evolve, Large Language Models (LLMs) have emerged as a cornerstone of modern AI applications. Their ability to comprehend, generate, and interact using human language has made them indispensable in multiple sectors. This section delves into what LLMs are, how they function, and the different types that serve various needs.

Large Language Models (LLMs) are a significant breakthrough in artificial intelligence, designed to help machines understand and generate human language. These models contain billions of parameters and are trained on vast amounts of text data. As a result, LLM-based systems can predict text, translate languages, and even hold conversations, making them a key tool in today’s tech landscape.

From powering virtual assistants to automating customer support and creating content, LLMs are transforming various industries. In this blog, we’ll explore how LLMs work, their unique capabilities, and some important statistics that highlight their rapid growth and influence.

What Are Large Language Models (LLMs)?

Large Language Models (LLMs) are advanced artificial intelligence systems built on deep learning architectures, particularly transformer models. They are trained on extensive datasets, enabling them to understand and generate human-like text. LLMs excel at a variety of natural language processing tasks, including text classification, translation, prediction, and content generation. Put simply, an LLM is a system that models the complex relationships between words and phrases in context.

An LLM can carry out a wide range of tasks, from writing fluent text to translating languages and answering detailed questions. Its capabilities are tied in part to its size, measured in billions of parameters, which helps it capture linguistic subtleties. At their core, these models analyze patterns in vast text datasets to predict and generate meaningful language. OpenAI’s GPT-3, with 175 billion parameters, is a prime example.

Key Statistics on Large Language Models (LLMs): Growth, Capabilities, and Industry Impact

Large Language Models (LLMs) are transforming industries with their advanced capabilities and rapid adoption. Below are key statistics that highlight their growth, performance, and impact across various sectors.

- According to Fortune Business Insights, the global AI market, driven significantly by advancements in large language models, is projected to grow from $515.31 billion in 2023 to $1.59 trillion by 2030, with a CAGR of 22.4% from 2023 to 2030.

- According to OpenAI, GPT-3, one of the most well-known LLMs, contains 175 billion parameters. Its Common Crawl training data started as roughly 45 terabytes of compressed plaintext, which was filtered down to about 570 GB before training. LLMs have reduced error rates in NLP tasks by 20-30% compared to earlier models, improving performance in machine translation, summarization, and sentiment analysis.

- According to Gartner, by 2024, 75% of enterprises are expected to use AI-based solutions, including LLMs, for enhancing operational efficiency and customer engagement.

- According to Lambda Labs, a single GPT-3 training run is estimated to cost around $4.6 million on cloud GPUs (other widely reported estimates put the figure near $12 million), showcasing the immense computational and financial resources required to build such models.

- According to IDC, the use of LLMs is contributing to an increase in demand for AI professionals. The AI job market is expected to reach $1.25 trillion by 2025.

- According to MIT Technology Review, LLMs have improved natural language understanding accuracy by 20-30% over previous models, making them more effective for applications like chatbots, content generation, and translation.

- According to McKinsey & Company, 75% of top companies globally have integrated LLM-based systems into their operations, primarily for chatbots, automated customer service, and content generation.

- According to GitHub, Copilot, which is powered by OpenAI’s Codex model, is used by over 1.5 million developers, with 88% of users reporting increased coding efficiency.

How LLMs Work: The Basics of Training and Performance

LLMs operate by predicting the next word in a sentence based on the context of previous words, a method known as autoregressive text generation. The model is pre-trained on a large corpus of text data (often sourced from books, websites, and articles) and fine-tuned for specific tasks. The underlying large language model architecture is usually a transformer, a type of neural network that excels in tasks involving sequential data, such as text.
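To make autoregressive generation concrete, here is a minimal sketch that swaps the transformer for a toy bigram counter. The corpus and the function names `train_bigram` and `generate` are illustrative assumptions, not part of any real LLM library. The loop is the same idea at a much smaller scale: predict the next word from what came before, append it, and repeat.

```python
from collections import Counter, defaultdict

def train_bigram(tokens):
    """Count how often each word follows each other word (a toy stand-in for an LLM)."""
    follows = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        follows[prev][nxt] += 1
    return follows

def generate(follows, start, max_new_tokens=5):
    """Greedy autoregressive generation: repeatedly append the most likely next word."""
    output = [start]
    for _ in range(max_new_tokens):
        candidates = follows.get(output[-1])
        if not candidates:
            break  # no known continuation for the last word
        next_word, _count = candidates.most_common(1)[0]
        output.append(next_word)
    return output

# Hypothetical toy corpus; a real model is trained on billions of tokens.
corpus = "the cat sat on the mat and the cat sat on the rug".split()
model = train_bigram(corpus)
print(" ".join(generate(model, "the", max_new_tokens=4)))  # the cat sat on the
```

A real LLM conditions on the whole preceding context rather than only the last word, but the generate-one-token-then-feed-it-back loop is the same.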

Key Steps in Training LLMs:

Training Large Language Models (LLMs) involves critical steps like data collection, tokenization, and iterative learning through pre-training and fine-tuning. These processes enable LLMs to grasp language nuances and perform effectively across diverse applications.

- Pre-Training: During this phase, the model learns general language representations by processing vast amounts of data. The model’s primary task is to predict missing or next words, enabling it to understand grammar and structure.

- Fine-Tuning: After pre-training, the model is fine-tuned on task-specific data, making it more effective at performing specific tasks like sentiment analysis, summarization, or translation.

- Inference: Once trained, the model is used for inference, where it generates text, answers questions, or performs other language-related tasks based on input prompts.
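The next-word objective used in pre-training can be sketched in a few lines. The example below is a simplified illustration: the helper name `next_word_examples` and the `context_size` parameter are assumptions for this sketch. It shows how a single sentence yields many (context, target) training pairs without any manual labeling.

```python
def next_word_examples(tokens, context_size=3):
    """Turn a token sequence into (context, target) pairs for next-word prediction."""
    examples = []
    for i in range(1, len(tokens)):
        # The context is the (up to) context_size tokens before position i.
        context = tokens[max(0, i - context_size):i]
        examples.append((context, tokens[i]))
    return examples

sentence = "large language models predict the next word".split()
for context, target in next_word_examples(sentence):
    print(context, "->", target)
```

Because the targets come from the text itself, any large corpus can serve as training data. Fine-tuning typically reuses the same mechanism on a smaller, task-specific dataset.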

Understanding the Performance of Large Language Models

The performance of Large Language Models (LLMs) showcases their remarkable ability to understand and generate human-like text, driving innovation across various fields. By leveraging advanced metrics and contextual insights, LLMs continue to enhance user experiences and deliver impactful solutions.

Contextual Understanding

LLMs generate coherent responses by leveraging input context, allowing for nuanced conversations. Their attention mechanisms analyze relationships between words, enhancing comprehension and relevance.

Language Generation

These models excel at producing human-like text, making them ideal for applications like chatbots and content creation. They ensure fluency and maintain relevance in their responses.

Versatility

LLMs can adapt to various tasks, such as translation, summarization, and creative writing. This flexibility enables deployment across multiple industries, driving innovation and efficiency.

Limitations

While powerful, LLMs may generate biased or inappropriate content, reflecting the limitations of their training data. They also lack true understanding, relying on learned patterns instead.

Scalability

Performance improves with larger models and extensive datasets, enhancing language understanding. However, increased size can lead to diminishing returns and higher computational costs.

Real-world Applications

LLMs are widely used in customer service, education, content creation, and finance, enhancing user experiences through personalized interactions and efficient responses.

Future Directions

Ongoing research aims to improve model robustness and reduce biases. Exploring multimodal LLMs, which integrate various data types, holds promise for richer applications.

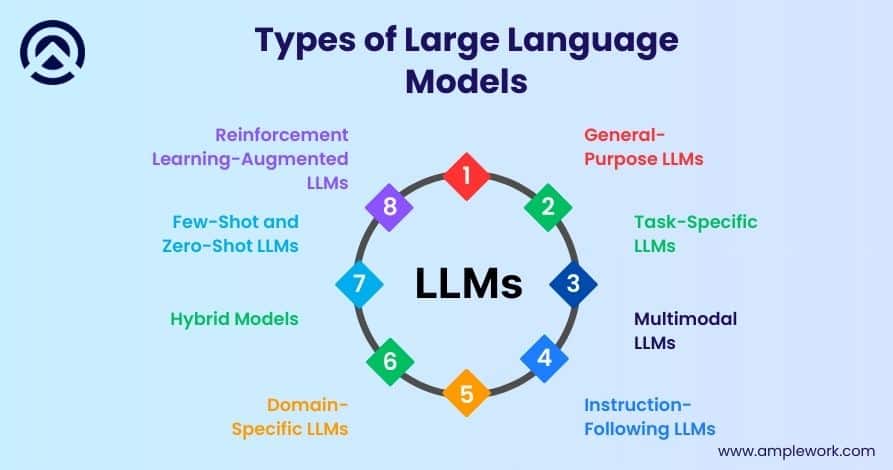

Types of Large Language Models

LLMs can be broadly categorized based on their architecture and application, each serving distinct use cases. Let’s explore the key types of LLMs and how they are used in various fields.

1. General-Purpose LLMs

General-purpose LLMs are designed to handle a broad range of language-based tasks. These models are highly versatile and can be applied to tasks like language translation, summarization, question answering, and text generation without needing extensive task-specific training. They are often the starting point for fine-tuning into more specialized models.

Use Cases:

- Chatbots: Automating customer service interactions.

- Content Creation: Generating blogs, articles, and product descriptions.

- Translation: Translating content into multiple languages for global audiences.

Examples:

- GPT (Generative Pre-trained Transformer) by OpenAI: A powerful general-purpose language model.

- BERT (Bidirectional Encoder Representations from Transformers) by Google: Focused on understanding language context and relationships between words.

2. Task-Specific LLMs

Task-specific LLMs are built from general-purpose models but are fine-tuned for specific tasks such as sentiment analysis, question answering, or legal document analysis. These models are trained on domain-specific datasets to optimize their performance in specialized fields.

Use Cases:

- Healthcare: Models like BioBERT are used to extract information from clinical notes, aiding doctors in diagnosis and research.

- Legal Services: LegalBERT is fine-tuned to analyze legal documents, making contract review more efficient.

Examples:

- BioBERT for biomedical text mining.

- LegalBERT for legal document processing.

3. Multimodal LLMs

Multimodal LLMs integrate various types of data, including text, images, and audio. By training these models to understand and generate language while also interpreting visual or auditory content, they become more versatile. Consequently, they are better suited for applications that require multimodal understanding.

Use Cases:

- eCommerce: Enhancing product recommendations by analyzing both text reviews and product images.

- Media & Entertainment: Generating captions for images or videos, improving content accessibility.

Examples:

- CLIP (Contrastive Language-Image Pretraining) by OpenAI, which can associate images with relevant textual descriptions.

4. Instruction-Following LLMs

These models are designed specifically to follow user instructions and perform tasks based on clear commands. They excel at providing responses that align with user prompts, making them ideal for automated customer service, personal assistants, and task automation.

Use Cases:

- Virtual Assistants: Automating tasks like email generation, calendar management, or answering common queries.

- Coding Assistance: Helping developers generate code based on specific instructions, improving productivity.

Examples:

- ChatGPT by OpenAI: A conversational agent that excels at following instructions and providing detailed responses.

5. Domain-Specific LLMs

These models are trained primarily on data from a specific industry or domain, such as legal, medical, or financial texts. Domain-specific LLMs specialize in handling tasks within their field, making them highly effective for industry-specific applications.

Use Cases:

- Finance: FinBERT analyzes sentiment in financial text, which can inform assessments of market trends and risks.

- Healthcare: Medical LLMs assist with diagnostics by analyzing patient records and medical literature.

6. Hybrid Models

Hybrid models combine language understanding with structured data, such as tables, graphs, or databases. These models are capable of processing and integrating unstructured text data with structured information, making them ideal for tasks that require multiple data types.

Use Cases:

- Business Intelligence: Extracting insights from a combination of textual data and structured datasets to generate reports and predictions.

- Knowledge Management: Automatically categorizing and summarizing vast amounts of unstructured text alongside structured data.

Examples:

- TaBERT (Table-BERT) for table-based data processing combined with natural language understanding.

7. Few-Shot and Zero-Shot LLMs

Few-shot LLMs perform a task after seeing only a small number of examples, typically supplied in the prompt, so they can do well with minimal task-specific data. Zero-shot models complete tasks without any task-specific examples, relying on the instruction alone, which makes them even more versatile.

Use Cases:

- Content Moderation: Detecting inappropriate content in new domains with minimal training data.

- Prototyping: Rapidly testing new product ideas or features with minimal resources.

Examples:

- GPT-3’s few-shot and zero-shot capabilities for text generation.
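The difference between zero-shot and few-shot use often comes down to how the prompt is written. The sketch below builds both kinds of prompt as plain strings. The `build_prompt` helper and the `Input:`/`Output:` layout are assumptions chosen for illustration, not a format required by any particular model.

```python
def build_prompt(task, query, examples=()):
    """Build a prompt; with no examples it is zero-shot, with a few it is few-shot."""
    parts = [task]
    for text, label in examples:
        parts.append(f"Input: {text}\nOutput: {label}")
    # The final block leaves the output blank for the model to complete.
    parts.append(f"Input: {query}\nOutput:")
    return "\n\n".join(parts)

task = "Classify the sentiment of each input as positive or negative."
query = "The checkout process was quick and painless."

zero_shot = build_prompt(task, query)
few_shot = build_prompt(
    task,
    query,
    examples=[
        ("I love this product.", "positive"),
        ("The delivery was late and the box was damaged.", "negative"),
    ],
)
print(few_shot)
```

Either string would then be sent to a model as-is. No weights are updated, which is why this style of use needs so little task-specific data.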

8. Reinforcement Learning-Augmented LLMs

These models combine language understanding with reinforcement learning techniques, allowing them to improve over time based on feedback. Reinforcement Learning-Augmented LLMs excel in dynamic environments that require continual learning.

Use Cases:

- Game Development: Improving in-game AI by learning from player interactions.

- Customer Interaction: Learning from past customer interactions to enhance future responses.

Examples:

- InstructGPT by OpenAI trained using Reinforcement Learning from Human Feedback (RLHF).

What are the Key Components of Large Language Models?

Large Language Models (LLMs) leverage advanced architectures and extensive training data to understand and generate human-like text. By combining various components, these models achieve remarkable capabilities in natural language processing and comprehension.

The key components of Large Language Models (LLMs) include the following:

1. Architecture

LLMs typically use transformer architecture, which allows for efficient processing of large datasets. This architecture includes mechanisms like self-attention that enable the model to weigh the importance of different words in a sentence, helping it understand the context better.
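As a rough illustration of self-attention, the sketch below implements scaled dot-product attention in plain Python. It is a simplification under stated assumptions: the three two-dimensional token vectors are made up, and the same vectors are reused as queries, keys, and values, whereas a real transformer derives each from learned projection matrices.

```python
import math

def softmax(scores):
    """Convert raw scores into weights that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def self_attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d)) V, one row at a time."""
    d = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        scores = [dot(q, k) / math.sqrt(d) for k in keys]
        weights = softmax(scores)  # how much this token attends to every token
        mixed = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights

# Hypothetical token vectors; a real model would apply learned Q, K, V projections first.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(x, x, x)
for row in weights:
    print([round(w, 3) for w in row])
```

Each row of `weights` shows how strongly one token attends to every token in the sequence, which is the "weighing the importance of different words" described above.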

2. Training Data

LLMs are trained on vast amounts of text data from diverse sources such as books, articles, and websites. This extensive dataset helps the model learn language patterns, grammar, facts, and various topics, enhancing its ability to generate coherent and contextually relevant text.

3. Parameters

The number of parameters in an LLM is a critical factor in its performance. Parameters represent the weights that the model adjusts during training to enhance its predictions. Generally, larger models have more parameters, enabling them to create more complex representations of language.
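A back-of-the-envelope calculation shows where a figure like 175 billion comes from. The sketch below uses a common approximation of about 12 × d_model² weights per transformer layer, plus the token embedding table, and ignores biases, layer normalization, and positional embeddings. The GPT-3 hyperparameters (96 layers, model width 12,288, vocabulary of 50,257 tokens) are taken from the published GPT-3 paper rather than from this article, and the function name is illustrative.

```python
def approx_transformer_params(n_layers, d_model, vocab_size):
    """Rough parameter count for a decoder-only transformer (an approximation)."""
    # Per layer: ~4*d^2 for attention projections + ~8*d^2 for a 4x-wide feed-forward block.
    per_layer = 12 * d_model ** 2
    embeddings = vocab_size * d_model
    return n_layers * per_layer + embeddings

gpt3 = approx_transformer_params(n_layers=96, d_model=12288, vocab_size=50257)
print(f"{gpt3 / 1e9:.1f}B parameters")  # about 174.6B, close to the quoted 175B
```

The estimate shows that the width of the model dominates the count: doubling `d_model` roughly quadruples the number of parameters per layer.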

4. Pre-training and Fine-tuning

LLMs undergo two main training phases. Pre-training involves learning from a large corpus of text without specific tasks, while fine-tuning tailors the model to specific applications or tasks, such as translation or question-answering, by training on smaller, task-specific datasets.

5. Tokenization

Before processing, text is divided into tokens (words or subwords). Tokenization maps text onto a fixed vocabulary, so the model can represent any input, including rare or unfamiliar words, as a sequence of tokens it already knows.
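The idea of splitting words into subword tokens can be sketched with a greedy longest-match rule. This is a toy illustration: the vocabulary is hand-picked for the example, and production tokenizers (such as BPE or WordPiece) learn their vocabularies from data and handle details this sketch ignores.

```python
def tokenize(word, vocab):
    """Greedy longest-match subword split; unmatched characters become '<unk>'."""
    tokens, i = [], 0
    while i < len(word):
        # Try the longest remaining piece first, then shrink it.
        for j in range(len(word), i, -1):
            if word[i:j] in vocab:
                tokens.append(word[i:j])
                i = j
                break
        else:
            tokens.append("<unk>")
            i += 1
    return tokens

# Hypothetical hand-picked vocabulary for illustration only.
vocab = {"token", "ization", "un", "believ", "able"}
print(tokenize("tokenization", vocab))  # ['token', 'ization']
print(tokenize("unbelievable", vocab))  # ['un', 'believ', 'able']
```

Because words are built from reusable pieces, a modest vocabulary can cover a very large number of distinct words.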

6. Inference

After training, LLMs generate responses based on user prompts. During inference, the model uses the learned representations to predict the most likely next words or sentences, considering the context provided by the input.
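At inference time, a model produces a score for every token in its vocabulary and the next token is chosen from the resulting probabilities. The sketch below shows one common way to do this, temperature-scaled sampling. The three-word vocabulary and the scores are made-up values for illustration.

```python
import math
import random

def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; lower temperature sharpens the distribution."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample_next(vocab, logits, temperature=1.0, rng=random):
    """Sample one token according to its temperature-scaled probability."""
    probs = softmax(logits, temperature)
    return rng.choices(vocab, weights=probs, k=1)[0]

# Hypothetical scores a model might assign after "The capital of France is".
vocab = ["Paris", "London", "banana"]
logits = [4.0, 2.0, -1.0]

for t in (0.5, 1.0, 2.0):
    print(t, [round(p, 3) for p in softmax(logits, t)])
print(sample_next(vocab, logits, temperature=0.5, rng=random.Random(0)))
```

Lower temperatures concentrate probability on the top-scoring token and give more predictable output, while higher temperatures spread it out and give more varied text.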

7. Evaluation Metrics

To assess the performance of LLMs, various evaluation metrics are used, such as perplexity, accuracy, and BLEU scores. These metrics help determine how well the model performs on specific tasks and how effectively it generates human-like text.
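Of the metrics mentioned, perplexity is the simplest to compute by hand. It is the exponential of the average negative log-probability the model assigned to each actual token. The sketch below assumes those per-token probabilities are already available, and the numbers are invented for illustration.

```python
import math

def perplexity(token_probs):
    """Perplexity = exp(mean negative log-probability of the observed tokens)."""
    avg_nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_nll)

# Hypothetical probabilities two models assigned to the same four tokens.
confident_model = [0.9, 0.8, 0.95, 0.85]
uncertain_model = [0.25, 0.25, 0.25, 0.25]

print(round(perplexity(confident_model), 3))
print(round(perplexity(uncertain_model), 3))  # 4.0
```

A perplexity of 4 means the model was, on average, as uncertain as if it were choosing uniformly among four options, so lower values indicate better predictions.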

By integrating these components, LLMs can effectively understand and generate natural language, making them powerful tools for various applications in natural language processing.

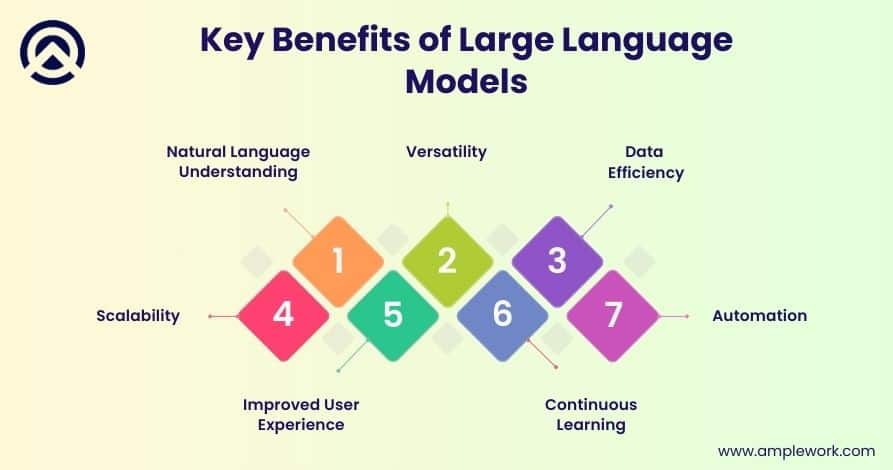

What are the Key Benefits of Large Language Models?

Large Language Models (LLMs) have revolutionized the way we interact with technology by enhancing our ability to process and understand natural language. Their powerful capabilities offer a wide range of benefits across various industries, driving innovation and efficiency. Here are the key benefits of LLMs:

1. Natural Language Understanding

LLMs excel in comprehending and generating human-like text, enabling applications such as chatbots, virtual assistants, and language translation.

2. Versatility

You can fine-tune these models for various tasks, including summarization, content generation, sentiment analysis, and more, making them adaptable for different industries and use cases.

3. Data Efficiency

Because LLMs learn general language patterns from vast amounts of text during pre-training, they can often produce relevant, context-aware responses for a new task with only a few task-specific examples.

4. Scalability

They can handle a wide range of queries and tasks simultaneously, making them suitable for large-scale applications across businesses and organizations.

5. Improved User Experience

By enabling more intuitive interactions through natural language, LLMs enhance user engagement and satisfaction in applications like customer support and content creation.

6. Continuous Learning

You can update LLMs with new information and trends, keeping them current and enhancing their performance over time.

7. Automation

They can automate various language-related tasks, reducing the need for human intervention and streamlining processes across industries.

These benefits highlight the transformative potential of LLMs in various fields, from customer service to content creation and beyond.

Key Takeaways

- The performance of LLMs is evaluated using various metrics such as accuracy, fluency, and contextual relevance, which are essential for determining their effectiveness in real-world applications.

- The training data’s quality and diversity significantly impact an LLM’s performance, as larger and more varied datasets lead to better understanding and generation capabilities.

- Innovations in model architecture and training techniques have led to significant improvements in LLM performance, allowing them to tackle more complex language tasks with increased efficiency.

- LLMs are being integrated into numerous industries, including healthcare, finance, and entertainment, demonstrating their versatility and ability to enhance productivity and creativity.

- As LLMs become more prevalent, it is crucial to address ethical concerns surrounding their use, including biases in training data and the potential for misuse in generating misleading information.

How Can Amplework Help With LLMs?

Amplework is a leading AI development agency committed to harnessing the power of large-scale language models (LLMs) to elevate your business capabilities. Our team of experts specializes in delivering tailored large language model development services, ensuring that every solution aligns with your unique requirements. We seamlessly integrate these models into your existing systems, enabling a smooth transition and minimal disruption. Through focused training and fine-tuning with domain-specific data, we enhance the performance and relevance of each model. Our continuous support ensures your LLMs stay optimized and effective. By partnering with Amplework, you unlock the full potential of large-scale language models, driving innovation and operational efficiency across your organization.

- Tailored LLM Solutions

- Seamless Integration

- Intelligent Fine-Tuning

- Proactive Support & Maintenance

- Expert-Led Consultation

- Scalability & Flexibility

- Innovative Use Cases

Conclusion

In conclusion, large language models have reshaped the way we approach language processing tasks. As this guide has shown, their capabilities extend far beyond simple text generation. From enhancing customer experiences to streamlining workflows, their value lies in their versatility and depth. Embracing these tools means stepping into a future where communication, creativity, and automation align to drive real innovation.

Frequently Asked Questions (FAQs)

What is a large language model (LLM)?

A large language model (LLM) is an AI system that processes, understands, and generates human-like text by training on vast amounts of language data.

What are the key applications of large language models?

Large Language Models (LLMs) have transformed various industries by providing powerful solutions for natural language processing tasks. Their ability to understand and generate human-like text opens up a wide range of applications that enhance productivity and user experience. Here are the key applications of LLMs:

- Chatbots and Virtual Assistants

- Content Creation

- Translation Services

- Sentiment Analysis

- Summarization

- Personalized Recommendations

- Education and Tutoring

- Research and Data Analysis

- Creative Writing and Storytelling

- Code Generation

What is the future of Large Language Models?

The future of LLMs promises significant advancements in understanding and specialization, enabling more personalized and efficient interactions. With a focus on ethical development and integration across various sectors, LLMs are set to transform industries and user experiences alike.

- Improved Understanding

- Domain Specialization

- Real-time Interactivity

- Integration with IoT

- Ethical AI Development

- User Personalization

- Greater Accessibility

- Multimodal Capabilities

- Energy Efficiency

- Collaborative Learning

What are the top 10 most popular large language models?

Here are some widely recognized large language model examples:

- GPT-4 (Generative Pre-trained Transformer 4)

- BERT (Bidirectional Encoder Representations from Transformers)

- T5 (Text-to-Text Transfer Transformer)

- XLNet

- RoBERTa (Robustly optimized BERT approach)

- ChatGPT

- ERNIE (Enhanced Representation through Knowledge Integration)

- GPT-Neo and GPT-J

- FLAN-T5

- ALBERT (A Lite BERT)

What is retrieval-augmented generation (RAG)?

Retrieval-augmented generation (RAG) is a technique that enhances large language models by retrieving external information to improve accuracy and relevance in generated responses.
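The retrieval step can be sketched without any external services. In the minimal example below, the documents, the helper names, and the bag-of-words cosine similarity are all assumptions chosen for simplicity, and real systems usually use learned embeddings and a vector index instead. The most relevant document is found and placed in the prompt ahead of the question.

```python
import math
import re
from collections import Counter

def bag_of_words(text):
    """Lowercase word counts; a crude stand-in for a learned embedding."""
    return Counter(re.findall(r"[a-z0-9-]+", text.lower()))

def cosine(a, b):
    num = sum(a[w] * b[w] for w in set(a) & set(b))
    den = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return num / den if den else 0.0

def retrieve(query, documents, k=1):
    """Return the k documents most similar to the query."""
    q = bag_of_words(query)
    ranked = sorted(documents, key=lambda d: cosine(q, bag_of_words(d)), reverse=True)
    return ranked[:k]

def build_rag_prompt(query, documents, k=1):
    """Place the retrieved text before the question so the model can ground its answer."""
    context = "\n".join(retrieve(query, documents, k))
    return f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"

# Hypothetical knowledge base built from facts stated in this article.
documents = [
    "GPT-3 has 175 billion parameters.",
    "BioBERT is used for biomedical text mining.",
    "CLIP associates images with relevant textual descriptions.",
]
prompt = build_rag_prompt("How many parameters does GPT-3 have?", documents)
print(prompt)
```

The resulting prompt would then be passed to an LLM, which can answer from the supplied context rather than relying only on what it memorized during training.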

How are large language models (LLMs) built?

Large language models (LLMs) are built using transformer-based neural networks and trained on extensive datasets, requiring high computational power and advanced machine learning techniques.

sales@amplework.com

(+91) 9636-962-228
